System and Deployment Architecture Team

João Netto

D.Sc.

Juliano Araujo Wickboldt

Ph.D.

Marcos Tomazzoli Leipnitz

D.Sc.

Francisco Paiva Knebel

M.Sc. Student

Fernando Mello de Barros

B.Sc. Student

Rafael Ventura Trevisan

B.Sc. Student

Our objectives

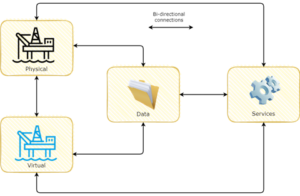

The main objective of this working group is to define a conceptual system architecture for the next generation of digital twins (DTs) for oil production fields. We initially expect this architecture to be inspired by the five-dimension DT model proposed by Tao et al. in the article “Digital twin driven prognostics and health management for complex equipment”. These dimensions are: (i) a Physical entity with various functional subsystems and sensory devices; (ii) a high-fidelity Virtual model of the Physical entity; (iii) a set of Services that optimize the operation of the Physical entity and calibrate the parameters of its Virtual model; (iv) Data acquired from both the Physical and Virtual entities, domain knowledge, and any fused, transformed, or enriched information extracted from the raw data; and (v) bidirectional Connections, representing the communication and flow of encoded information among the other four dimensions.
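To make the relationships among the five dimensions more concrete, the following Python sketch models dimensions (i) to (iv) as an enumeration and dimension (v), Connections, as unordered (and therefore bidirectional) links between pairs of them. All class and function names are hypothetical illustrations, not part of Tao et al.'s model or of our architecture. A fully connected twin simply links every pair of the four dimensions.

```python
# Hypothetical sketch of the five-dimension DT structure; names are illustrative.
from dataclasses import dataclass, field
from enum import Enum
from itertools import combinations


class Dimension(Enum):
    """Dimensions (i)-(iv); dimension (v), Connections, links pairs of them."""
    PHYSICAL = "physical entity"
    VIRTUAL = "virtual model"
    SERVICES = "services"
    DATA = "data"


@dataclass(frozen=True)
class Connection:
    """A bidirectional link, stored as an unordered pair of dimensions."""
    ends: frozenset


@dataclass
class FiveDimensionDT:
    connections: set = field(default_factory=set)

    def connect(self, a: Dimension, b: Dimension) -> None:
        if a == b:
            raise ValueError("a connection must join two distinct dimensions")
        self.connections.add(Connection(frozenset((a, b))))

    def connected(self, a: Dimension, b: Dimension) -> bool:
        # frozenset makes (a, b) and (b, a) the same connection.
        return Connection(frozenset((a, b))) in self.connections


def fully_connected_twin() -> FiveDimensionDT:
    """Link every pair of the four dimensions: C(4, 2) = 6 connections."""
    dt = FiveDimensionDT()
    for a, b in combinations(Dimension, 2):
        dt.connect(a, b)
    return dt


if __name__ == "__main__":
    twin = fully_connected_twin()
    print(len(twin.connections))  # 6
    print(twin.connected(Dimension.DATA, Dimension.PHYSICAL))  # True
```

Treating Connections as explicit objects, rather than as implicit calls between components, leaves room to attach protocol or quality-of-service details to each link later.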

Besides defining the conceptual system architecture, this working group will also address the implementation and deployment of its conceptual components, leveraging cloud-based technologies to achieve the scale, robustness, and responsiveness required by modern Digital Twin systems.

Results and Contributions

A study on cloud and edge computing for the implementation of digital twins in the oil & gas industries

Francisco Paiva Knebel, Rafael Trevisan, Givanildo Santana do Nascimento, Mara Abel and Juliano Araujo Wickboldt. Computers & Industrial Engineering.

Cloud-based data acquisition based on MQTT

Rafael Antonio Ventura Trevisan, Francisco Paiva Knebel, Juliano Araújo Wickboldt and Mara Abel. Network and Service Management and Operation.

What we are currently working on

Intelligent orchestration of MQTT-based data acquisition on a heterogeneous cloud and edge deployment

We have already explored auto-scaling MQTT brokers to implement flexible and efficient data acquisition for Digital Twin systems over a homogeneous, cloud-based infrastructure. However, acquiring data from complex distributed installations (e.g., an oil and gas production plant) often requires dealing with heterogeneous communication and computing infrastructures distributed across the cloud-edge continuum. We are therefore extending the auto-scaling data acquisition system so that it can operate autonomously on a heterogeneous cloud and edge deployment.
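As a rough illustration of the kind of decision such a system has to make, the sketch below places MQTT broker replicas across edge and cloud sites for a given aggregate message rate, filling resource-constrained edge sites first and spilling the remainder over to the cloud. It is a hypothetical greedy policy, not the one used in our work: the `Site` fields, the per-replica capacities, and the default 70% target utilization are all assumptions chosen for illustration.

```python
# Hypothetical replica-placement policy; capacities and names are assumptions.
import math
from dataclasses import dataclass


@dataclass
class Site:
    """A deployment location in the cloud-edge continuum."""
    name: str
    tier: str                # "edge" or "cloud"
    replica_capacity: float  # msg/s one broker replica sustains (assumed known)
    max_replicas: int        # edge sites usually allow fewer replicas


def plan_replicas(load: float, sites: list, target_util: float = 0.7) -> dict:
    """Return {site name: broker replicas} for an aggregate load in msg/s.

    Edge sites are filled first (closer to the field devices); whatever
    they cannot absorb spills over to cloud sites. Each replica is only
    loaded up to target_util of its capacity to leave burst headroom.
    """
    if not 0 < target_util <= 1:
        raise ValueError("target_util must be in (0, 1]")
    plan = {}
    remaining = load
    # sorted() is stable; the key puts edge sites (False) before cloud (True).
    for site in sorted(sites, key=lambda s: s.tier != "edge"):
        effective = site.replica_capacity * target_util
        needed = math.ceil(remaining / effective) if remaining > 0 else 0
        replicas = min(needed, site.max_replicas)
        plan[site.name] = replicas
        remaining -= replicas * effective
    if remaining > 0:
        raise RuntimeError("load exceeds the capacity of all sites")
    return plan


if __name__ == "__main__":
    sites = [Site("cloud", "cloud", 2000, 50), Site("edge-1", "edge", 1000, 2)]
    print(plan_replicas(3000, sites, target_util=0.5))  # {'edge-1': 2, 'cloud': 2}
```

For example, with one edge site limited to two replicas of 1,000 msg/s each, a cloud site whose replicas handle 2,000 msg/s, a target utilization of 0.5, and a load of 3,000 msg/s, the plan assigns two replicas to the edge and two to the cloud. A real controller would also weigh link latency and bandwidth between tiers, which this sketch ignores.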

Integrated orchestration of data acquisition and processing based on cloud and edge computing

The implementation of data-centric Digital Twins requires not only efficient data acquisition among the participating components of the system, but also sophisticated processing (e.g., filtering, transforming, aggregating) and storage. One approach to building such a system is to rely on event streaming platforms (e.g., Apache Kafka). However, transporting data through a communication infrastructure, for instance with a publish/subscribe messaging protocol such as MQTT, and processing the resulting message streams as events are the responsibility of two separate subsystems that must work in harmony for the Digital Twin as a whole to perform well. We are therefore investigating ways to auto-scale MQTT communication for data acquisition in an integrated fashion with (auto-scaling) event processing in Apache Kafka, considering a realistic cloud-edge deployment and representative Digital Twin workloads.
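The coupling between the two subsystems can be sketched as a single planning step that sizes both the MQTT broker tier and a Kafka consumer group from the same observed ingest rate. The sketch assumes that MQTT messages are forwarded into one Kafka topic and that per-broker and per-consumer throughputs are known constants; these assumptions and all names are hypothetical. One real constraint it does encode is that Kafka assigns each partition to at most one consumer within a group, so consumers beyond the partition count add no parallelism.

```python
# Hypothetical joint sizing of MQTT brokers and a Kafka consumer group.
import math
from dataclasses import dataclass


@dataclass
class PipelinePlan:
    mqtt_brokers: int
    kafka_consumers: int
    partition_shortfall: int  # > 0 means the topic needs more partitions


def plan_pipeline(rate: float, broker_capacity: float,
                  consumer_capacity: float, partitions: int) -> PipelinePlan:
    """Size both stages from one observed ingest rate (msg/s).

    Capacities are assumed per-instance throughputs in msg/s.
    """
    if broker_capacity <= 0 or consumer_capacity <= 0 or partitions < 1:
        raise ValueError("capacities must be positive and partitions >= 1")
    brokers = max(1, math.ceil(rate / broker_capacity))
    wanted = max(1, math.ceil(rate / consumer_capacity))
    # Within a consumer group each partition goes to at most one consumer,
    # so consumers beyond the partition count would sit idle.
    consumers = min(wanted, partitions)
    return PipelinePlan(brokers, consumers, max(0, wanted - partitions))


if __name__ == "__main__":
    print(plan_pipeline(12000, 5000, 2000, 4))
    # PipelinePlan(mqtt_brokers=3, kafka_consumers=4, partition_shortfall=2)
```

For instance, at 12,000 msg/s with brokers handling 5,000 msg/s and consumers 2,000 msg/s on a four-partition topic, the plan yields three brokers and four consumers and reports a shortfall of two partitions: scaling the MQTT tier alone would simply move the bottleneck to event processing.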